loss

loss

Samples

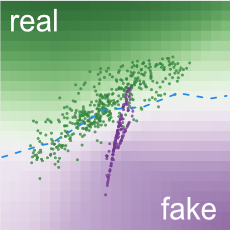

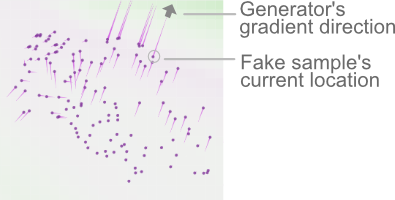

Samples in green regions are likely to be real; those in purple regions likely fake.

Opacity encodes density: darker purple means more samples in smaller area.

This sample needs to move upper right to decrease generator's loss.

Many machine learning systems look at some kind of complicated input (say, an image) and produce a simple output (a label like, "cat"). By contrast, the goal of a generative model is something like the opposite: take a small piece of input—perhaps a few random numbers—and produce a complex output, like an image of a realistic-looking face. A generative adversarial network (GAN) is an especially effective type of generative model, introduced only a few years ago, which has been a subject of intense interest in the machine learning community.

You might wonder why we want a system that produces realistic images, or plausible simulations of any other kind of data. Besides the intrinsic intellectual challenge, this turns out to be a surprisingly handy tool, with applications ranging from art to enhancing blurry images.

The idea of a machine "creating" realistic images from scratch can seem like magic, but GANs use two key tricks to turn a vague, seemingly impossible goal into reality.

The first idea, not new to GANs, is to use randomness as an ingredient. At a basic level, this makes sense: it wouldn't be very exciting if you built a system that produced the same face each time it ran. Just as important, though, is that thinking in terms of probabilities also helps us translate the problem of generating images into a natural mathematical framework. We obviously don't want to pick images at uniformly at random, since that would just produce noise. Instead, we want our system to learn about which images are likely to be faces, and which aren't. Mathematically, this involves modeling a probability distribution on images, that is, a function that tells us which images are likely to be faces and which aren't. This type of problem—modeling a function on a high-dimensional space—is exactly the sort of thing neural networks are made for.

The big insights that defines a GAN is to set up this modeling problem as a kind of contest. This is where the "adversarial" part of the name comes from. The key idea is to build not one, but two competing networks: a generator and a discriminator. The generator tries to create random synthetic outputs (for instance, images of faces), while the discriminator tries to tell these apart from real outputs (say, a database of celebrities). The hope is that as the two networks face off, they'll both get better and better—with the end result being a generator network that produces realistic outputs.

To sum up: Generative adversarial networks are neural networks that learn to choose samples from a special distribution (the "generative" part of the name), and they do this by setting up a competition (hence "adversarial").

GANs are complicated beasts, and the visualization has a lot going on. Here are the basic ideas.

First, we're not visualizing anything as complex as generating realistic images. Instead, we're showing a GAN that learns a distribution of points in just two dimensions. There's no real application of something this simple, but it's much easier to show the system's mechanics. For one thing, probability distributions in plain old 2D (x,y) space are much easier to visualize than distributions in the space of high-resolution images.

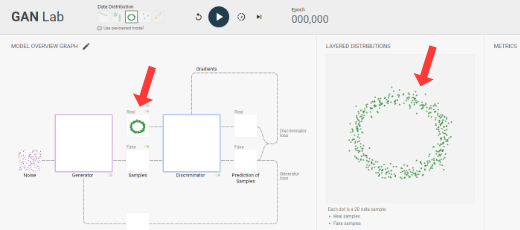

At top, you can choose a probability distribution for GAN to learn, which we visualize as a set of data samples. Once you choose one, we show them at two places: a smaller version in the model overview graph view on the left; and a larger version in the layered distributions view on the right.

We designed the two views to help you better understand how a GAN works to generate realistic samples:

(1) The model overview graph shows the architecture of a GAN, its major components and how they are connected, and also visualizes results produced by the components;

(2) The layered distributions view overlays the visualizations of the components from the model overview graph, so you can more easily compare the component outputs when analyzing the model.

To start training the GAN model, click the play button (![]() ) on the toolbar. Besides real samples from your chosen distribution, you'll also see fake samples that are generated by the model. Fake samples' positions continually updated as the training progresses. A perfect GAN will create fake samples whose distribution is indistinguishable from that of the real samples. When that happens, in the layered distributions view, you will see the two distributions nicely overlap.

) on the toolbar. Besides real samples from your chosen distribution, you'll also see fake samples that are generated by the model. Fake samples' positions continually updated as the training progresses. A perfect GAN will create fake samples whose distribution is indistinguishable from that of the real samples. When that happens, in the layered distributions view, you will see the two distributions nicely overlap.

Recall that the generator and discriminator within a GAN is having a little contest, competing against each other, iteratively updating the fake samples to become more similar to the real ones. GAN Lab visualizes the interactions between them.

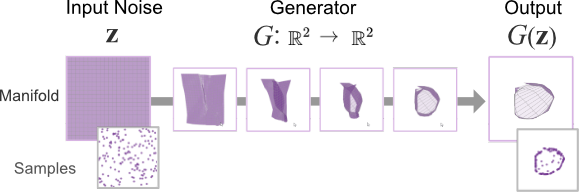

Generator. As described earlier, the generator is a function that transforms a random input into a synthetic output. In GAN Lab, a random input is a 2D sample with a (x, y) value (drawn from a uniform or Gaussian distribution), and the output is also a 2D sample, but mapped into a different position, which is a fake sample. One way to visualize this mapping is using manifold [Olah, 2014]. The input space is represented as a uniform square grid. As the function maps positions in the input space into new positions, if we visualize the output, the whole grid, now consisting of irregular quadrangles, would look like a warped version of the original regular grid. The area (or density) of each (warped) cell has now changed, and we encode the density as opacity, so a higher opacity means more samples in smaller space. A very fine-grained manifold will look almost the same as the visualization of the fake samples. This visualization shows how the generator learns a mapping function to make its output look similar to the distribution of the real samples.

Discriminator. As the generator creates fake samples, the discriminator, a binary classifier, tries to tell them apart from the real samples. GAN Lab visualizes its decision boundary as a 2D heatmap (similar to TensorFlow Playground). The background colors of a grid cell encode the confidence values of the classifier's results. Darker green means that samples in that region are more likely to be real; darker purple, more likely to be fake. As a GAN approaches the optimum, the whole heatmap will become more gray overall, signalling that the discriminator can no longer easily distinguish fake examples from the real ones.

In a GAN, its two networks influence each other as they iteratively update themselves. A great use for GAN Lab is to use its visualization to learn how the generator incrementally updates to improve itself to generate fake samples that are increasingly more realistic. The generator does it by trying to fool the discriminator. The generator's loss value decreases when the discriminator classifies fake samples as real (bad for discriminator, but good for generator). GAN Lab visualizes gradients (as pink lines) for the fake samples such that the generator would achieve its success.

This way, the generator gradually improves to produce samples that are even more realistic. Once the fake samples are updated, the discriminator will update accordingly to finetune its decision boundary, and awaits the next batch of fake samples that try to fool itself. This iterative update process continues until the discriminator cannot tell real and fake samples apart.

GAN Lab has many cool features that support interactive experimentation.

GAN Lab uses TensorFlow.js, an in-browser GPU-accelerated deep learning library. Everything, from model training to visualization, is implemented with JavaScript. You only need a web browser like Chrome to run GAN Lab. Our implementation approach significantly broadens people's access to interactive tools for deep learning. The source code is available on GitHub.

GAN Lab was created by Minsuk Kahng, Nikhil Thorat, Polo Chau, Fernanda Viégas, and Martin Wattenberg, which was the result of a research collaboration between Georgia Tech and Google Brain/PAIR. We also thank Shan Carter and Daniel Smilkov, Google Big Picture team and Google People + AI Research (PAIR), and Georgia Tech Visualization Lab for their feedback.

For more information, check out research paper: