K-means segmentation

Hello. I have a grayscale image with a mole and skin which I want to segment with the K-means algorithm. I want the mole pixels to be classified in class 1 and the skin pixels to be classified in class 2. How can I do that? The code above works, but sometimes the mole is black and sometimes it is white. I want this to be done with k-means segmentation; I know it can be done in other ways.

Prashant Kumar answered.

2025-11-20

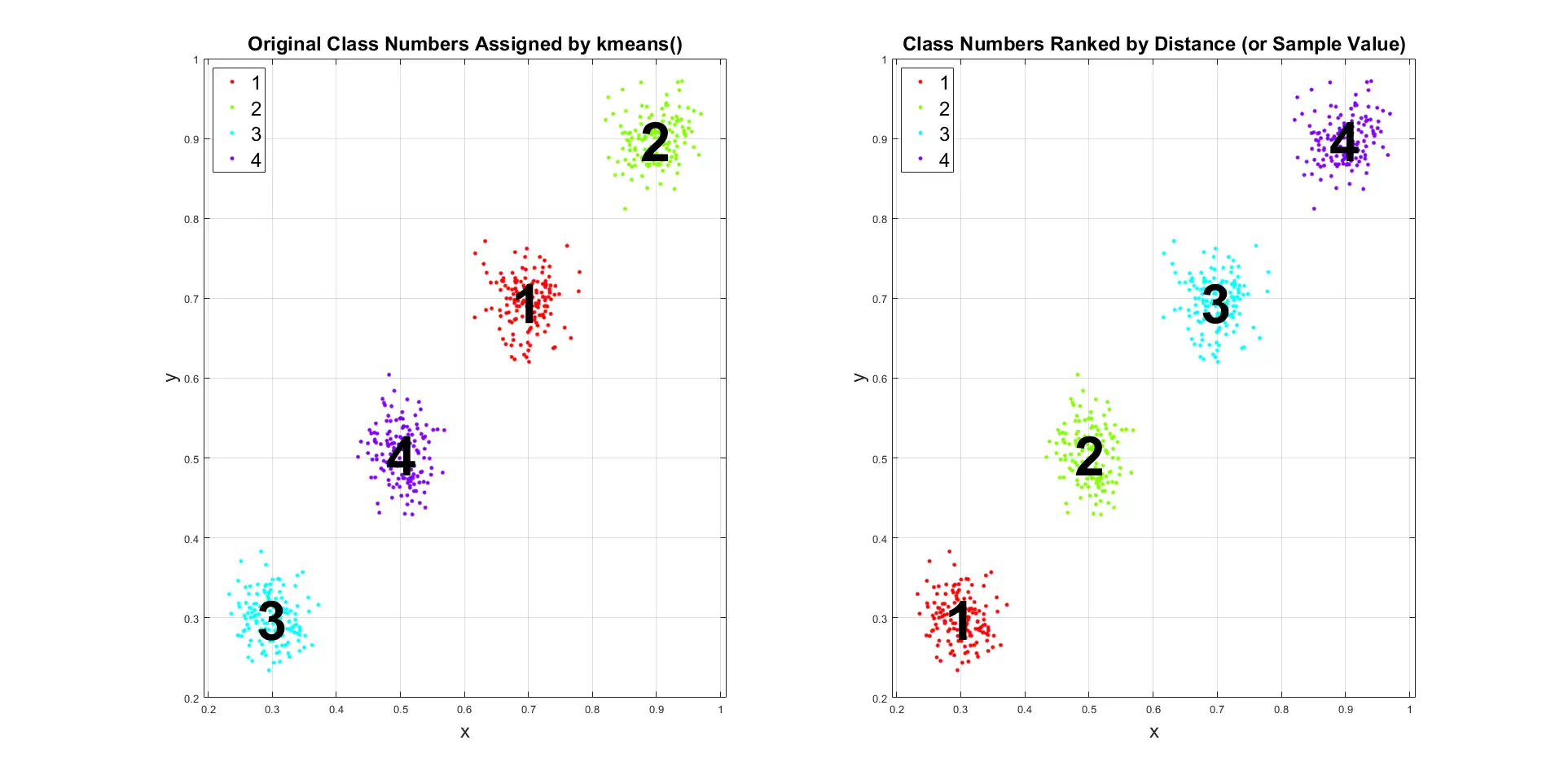

The class numbers that kmeans() assigns can vary from one run to the next because it starts from randomly chosen initial centroids. However, you can renumber the class labels if you know something about the classes, for example that you always want class 1 to be the darker class and class 2 to be the lighter class. See the demo code below.

% Demo to show how you can redefine the class numbers assigned by kmeans() to different numbers.

% In this demo, the original, arbitrary class numbers will be reassigned a new number

% according to how far the cluster centroid is from the origin.

clc; % Clear the command window.

close all; % Close all figures (except those of imtool.)

clearvars;

workspace; % Make sure the workspace panel is showing.

format long g;

format compact;

fontSize = 18;

%===========================================================================================================================================

% DEMO #1 : RELABEL ACCORDING TO DISTANCE FROM ORIGIN.

%===========================================================================================================================================

%-------------------------------------------------------------------------------------------------------------------------------------------

% FIRST CREATE SAMPLE DATA.

% Make up 4 clusters with 150 points each.

pointsPerCluster = 150;

spread = 0.03;

offsets = [0.3, 0.5, 0.7, 0.9];

% offsets = [0.62, 0.73, 0.84, 0.95];

xa = spread * randn(pointsPerCluster, 1) + offsets(1);

ya = spread * randn(pointsPerCluster, 1) + offsets(1);

xb = spread * randn(pointsPerCluster, 1) + offsets(2);

yb = spread * randn(pointsPerCluster, 1) + offsets(2);

xc = spread * randn(pointsPerCluster, 1) + offsets(3);

yc = spread * randn(pointsPerCluster, 1) + offsets(3);

xd = spread * randn(pointsPerCluster, 1) + offsets(4);

yd = spread * randn(pointsPerCluster, 1) + offsets(4);

x = [xa; xb; xc; xd];

y = [ya; yb; yc; yd];

xy = [x, y];

%-------------------------------------------------------------------------------------------------------------------------------------------

% K-MEANS CLUSTERING.

% Now do kmeans clustering.

% Determine what the best k is:

evaluationObject = evalclusters(xy, 'kmeans', 'DaviesBouldin', 'klist', [3:10])

% Do the kmeans with that k:

[assignedClass, clusterCenters] = kmeans(xy, evaluationObject.OptimalK);

clusterCenters % Echo to command window

% Do a scatter plot with the original class numbers assigned by kmeans.

hfig1 = figure;

subplot(1, 2, 1);

gscatter(x, y, assignedClass);

legend('FontSize', fontSize, 'Location', 'northwest');

grid on;

xlabel('x', 'fontSize', fontSize);

ylabel('y', 'fontSize', fontSize);

title('Original Class Numbers Assigned by kmeans()', 'fontSize', fontSize);

% Plot the class number labels on top of the cluster.

hold on;

for row = 1 : size(clusterCenters, 1)

text(clusterCenters(row, 1), clusterCenters(row, 2), num2str(row), 'FontSize', 50, 'FontWeight', 'bold', 'HorizontalAlignment', 'center', 'VerticalAlignment', 'middle');

end

hold off;

hfig1.WindowState = 'maximized'; % Maximize the figure window so that it takes up the full screen.

%-------------------------------------------------------------------------------------------------------------------------------------------

% SORTING ALGORITHM

% Sort the clusters according to how far each cluster center is from the origin.

% First get the distance of each cluster center (as reported by the kmeans function) from the origin.

distancesFromOrigin = sqrt(clusterCenters(:, 1) .^ 2 + clusterCenters(:, 2) .^2)

%-------------------------------------------------------------------------------------------------------------------------------------------

% NOW GET NEW CLASS NUMBERS ACCORDING TO THAT SORTING ALGORITHM.

% Now, say for example, that you want to number the classes according to how far from the origin they are.

% Determine what the new order to sort them in should be:

[sortedDistances, sortOrder] = sort(distancesFromOrigin, 'ascend') % Sort centroid distances, nearest first. sortOrder(n) is the old class number that becomes new class n.

% Get new class numbers for each point since, for example,

% what used to be class 4 will now be class 1 since class 4 is closest to the origin.

% (The actual numbers may change for each run since kmeans is based on random initial sets.)

% Instantiate a vector that will tell each point what its new class number will be.

newClassNumbers = zeros(length(x), 1);

% For each class, find out where it is

for k = 1 : size(clusterCenters, 1)

% First find out what points have this current class,

% and where they are by creating this logical vector.

currentClassLocations = assignedClass == k;

% Now assign all of those locations to their new class.

newClassNumber = find(k == sortOrder); % Find index in sortOrder where this class number appears.

fprintf('Initially the center of cluster %d is (%.2f, %.2f), %.2f from the origin.\n', ...

k, clusterCenters(k, 1), clusterCenters(k, 2), distancesFromOrigin(k)); % Print x (column 1) and y (column 2) of this centroid.

fprintf(' Relabeling all points in initial cluster #%d to cluster #%d.\n', k, newClassNumber);

% Do the relabeling right here:

newClassNumbers(currentClassLocations) = newClassNumber;

end

% Plot the clusters with their new labels and colors.

subplot(1, 2, 2);

gscatter(x, y, newClassNumbers);

grid on;

xlabel('x', 'fontSize', fontSize);

ylabel('y', 'fontSize', fontSize);

title('Class Numbers Ranked by Distance', 'fontSize', fontSize);

legend('FontSize', fontSize, 'Location', 'northwest');

% Basically, we're done now.

%-------------------------------------------------------------------------------------------------------------------------------------------

% DOUBLE CHECK, VERIFICATION, PROOF.

% To verify, let's get the mean (x,y) of each class after the relabeling.

fprintf('Now, after relabeling:\n');

for k = 1 : size(clusterCenters, 1)

% First find out what points have this class.

% and where they are by creating this logical vector.

currentClassLocations = newClassNumbers == k;

% Now compute the mean x and mean y of the points in this class.

meanx(k) = mean(x(currentClassLocations));

meany(k) = mean(y(currentClassLocations));

fprintf('The center of cluster %d is (%.2f, %.2f).\n', k, meanx(k), meany(k));

end

% cc = [assignedClass, newClassNumbers]; % Class assignments, side-by-side.

% Plot the class number labels on top of the cluster.

hold on;

for row = 1 : size(clusterCenters, 1)

text(meanx(row), meany(row), num2str(row), 'FontSize', 50, 'FontWeight', 'bold', 'HorizontalAlignment', 'center', 'VerticalAlignment', 'middle');

end

hold off;

%===========================================================================================================================================

% DEMO #2 : RELABEL ACCORDING TO NUMBER OF POINTS IN THE CLUSTER.

%===========================================================================================================================================

%-------------------------------------------------------------------------------------------------------------------------------------------

% FIRST CREATE SAMPLE DATA.

% Make up 4 clusters with a different number of points in each.

pointsPerCluster = [300, 1000, 100, 500];

spread = 0.03;

offsets = [0.3, 0.5, 0.7, 0.9];

% offsets = [0.62, 0.73, 0.84, 0.95];

xa = spread * randn(pointsPerCluster(1), 1) + offsets(1);

ya = spread * randn(pointsPerCluster(1), 1) + offsets(1);

xb = spread * randn(pointsPerCluster(2), 1) + offsets(2);

yb = spread * randn(pointsPerCluster(2), 1) + offsets(2);

xc = spread * randn(pointsPerCluster(3), 1) + offsets(3);

yc = spread * randn(pointsPerCluster(3), 1) + offsets(3);

xd = spread * randn(pointsPerCluster(4), 1) + offsets(4);

yd = spread * randn(pointsPerCluster(4), 1) + offsets(4);

x = [xa; xb; xc; xd];

y = [ya; yb; yc; yd];

xy = [x, y];

%-------------------------------------------------------------------------------------------------------------------------------------------

% K-MEANS CLUSTERING.

% Now do kmeans clustering.

[assignedClass, clusterCenters] = kmeans(xy, 4);

clusterCenters % Echo to command window

% Do a scatter plot with the original class numbers assigned by kmeans.

hfig2 = figure;

subplot(1, 2, 1);

gscatter(x, y, assignedClass);

legend('FontSize', fontSize, 'Location', 'northwest');

grid on;

xlabel('x', 'fontSize', fontSize);

ylabel('y', 'fontSize', fontSize);

title('Original Class Numbers Assigned by kmeans()', 'fontSize', fontSize);

% Plot the class number labels on top of the cluster.

hold on;

for row = 1 : size(clusterCenters, 1)

text(clusterCenters(row, 1), clusterCenters(row, 2), num2str(row), 'FontSize', 50, 'FontWeight', 'bold', 'HorizontalAlignment', 'center', 'VerticalAlignment', 'middle');

end

hold off;

hfig2.WindowState = 'maximized'; % Maximize the figure window so that it takes up the full screen.

%-------------------------------------------------------------------------------------------------------------------------------------------

% SORTING ALGORITHM

% Sort the clusters according to how many points each cluster contains.

% First count the number of points that kmeans assigned to each cluster.

for k = 1 : size(clusterCenters, 1)

pointsAssignedToThisCluster(k) = sum(assignedClass == k);

end

%-------------------------------------------------------------------------------------------------------------------------------------------

% NOW GET NEW CLASS NUMBERS ACCORDING TO THAT SORTING ALGORITHM.

% Now, say for example, that you want to number the classes according to how many points they contain.

% Determine what the new order to sort them in should be:

[sortedCounts, sortOrder] = sort(pointsAssignedToThisCluster, 'ascend') % Sort cluster sizes, smallest first. sortOrder(n) is the old class number that becomes new class n.

% Get new class numbers for each point since, for example,

% what used to be class 4 will now be class 1 if class 4 has the fewest points.

% (The actual numbers may change for each run since kmeans is based on random initial sets.)

% Instantiate a vector that will tell each point what its new class number will be.

newClassNumbers = zeros(length(x), 1);

% For each class, find out where it is

for k = 1 : size(clusterCenters, 1)

% First find out what points have this current class,

% and where they are by creating this logical vector.

currentClassLocations = assignedClass == k;

% Now assign all of those locations to their new class.

newClassNumber = find(k == sortOrder); % Find index in sortOrder where this class number appears.

fprintf('Initially the center of cluster %d is (%.2f, %.2f), with %d points in that cluster.\n', ...

k, clusterCenters(k, 1), clusterCenters(k, 2), pointsAssignedToThisCluster(k)); % Print x (column 1) and y (column 2) of this centroid.

fprintf(' Relabeling all points in initial cluster #%d to cluster #%d.\n', k, newClassNumber);

% Do the relabeling right here:

newClassNumbers(currentClassLocations) = newClassNumber;

end

% Plot the clusters with their new labels and colors.

subplot(1, 2, 2);

gscatter(x, y, newClassNumbers);

grid on;

xlabel('x', 'fontSize', fontSize);

ylabel('y', 'fontSize', fontSize);

title('Class Numbers Ranked by Cluster Size', 'fontSize', fontSize);

legend('FontSize', fontSize, 'Location', 'northwest');

% Basically, we're done now.

%-------------------------------------------------------------------------------------------------------------------------------------------

% DOUBLE CHECK, VERIFICATION, PROOF.

% To verify, let's get the mean (x,y) of each class after the relabeling.

fprintf('Now, after relabeling:\n');

for k = 1 : size(clusterCenters, 1)

% First find out what points have this class.

% and where they are by creating this logical vector.

currentClassLocations = newClassNumbers == k;

% Now compute the mean x and mean y of the points in this class.

meanx(k) = mean(x(currentClassLocations));

meany(k) = mean(y(currentClassLocations));

fprintf('The center of cluster %d is (%.2f, %.2f).\n', k, meanx(k), meany(k));

end

% cc = [assignedClass, newClassNumbers]; % Class assignments, side-by-side.

% Plot the class number labels on top of the cluster.

hold on;

for row = 1 : size(clusterCenters, 1)

text(meanx(row), meany(row), num2str(row), 'FontSize', 50, 'FontWeight', 'bold', 'HorizontalAlignment', 'center', 'VerticalAlignment', 'middle');

end

hold off;
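
For the grayscale image in the question, the same relabeling idea can be applied directly to pixel intensities with k = 2. The following is only a minimal sketch, not tested on your image. It assumes the mole is the darker of the two regions, and it uses a synthetic image as a stand-in for the real one. The names grayImage, lookup, labeledImage, and moleMask are placeholders chosen for illustration. Like the demo, it needs kmeans() from the Statistics and Machine Learning Toolbox.

```matlab
% Minimal sketch: k-means on a grayscale image with k = 2, then relabel so
% that class 1 is always the darker cluster (ASSUMED here to be the mole).
% A synthetic image stands in for the real one; replace it with your own data.
rng(0);                                   % Fixed seed so the sketch is repeatable.
grayImage = 0.8 + 0.02 * randn(100, 100); % Light "skin" background.
grayImage(40:60, 40:60) = 0.2 + 0.02 * randn(21, 21); % Dark "mole" patch.

% One row per pixel, one feature (the intensity).
pixelValues = double(grayImage(:));
[assignedClass, clusterCenters] = kmeans(pixelValues, 2);

% Rank the centroids by intensity, darkest first.
% sortOrder(n) is the old class number that becomes new class n.
[~, sortOrder] = sort(clusterCenters, 'ascend');

% Build a lookup table so that lookup(oldLabel) gives the new label.
lookup = zeros(size(sortOrder));
lookup(sortOrder) = 1 : numel(sortOrder);
newClass = lookup(assignedClass);

% Reshape back to the image dimensions.
% Class 1 = darker cluster (mole), class 2 = lighter cluster (skin).
labeledImage = reshape(newClass, size(grayImage));
moleMask = labeledImage == 1;
```

The lookup table does the same job as the for loop in the demo, without looping over the classes. If your moles can be lighter than the surrounding skin, intensity is the wrong sorting key, and you could sort by cluster size instead, as in Demo #2.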