You can use Classification Learner to train the following types of classifiers: decision trees, discriminant analysis, support vector machines, logistic regression, nearest neighbors, naive Bayes, and ensemble classification. In addition to training models, you can explore your data, select features, specify validation schemes, and evaluate results. You can export a model to the workspace to use it with new data, or generate MATLAB® code to learn about programmatic classification.

Training a model in Classification Learner consists of two parts:

- Validated Model: Train a model with a validation scheme. By default, the app protects against overfitting by applying cross-validation. Alternatively, you can choose holdout validation. The validated model is visible in the app.

- Full Model: Train a model on full data without validation. The app trains this model simultaneously with the validated model. However, the model trained on full data is not visible in the app. When you choose a classifier to export to the workspace, Classification Learner exports the full model.

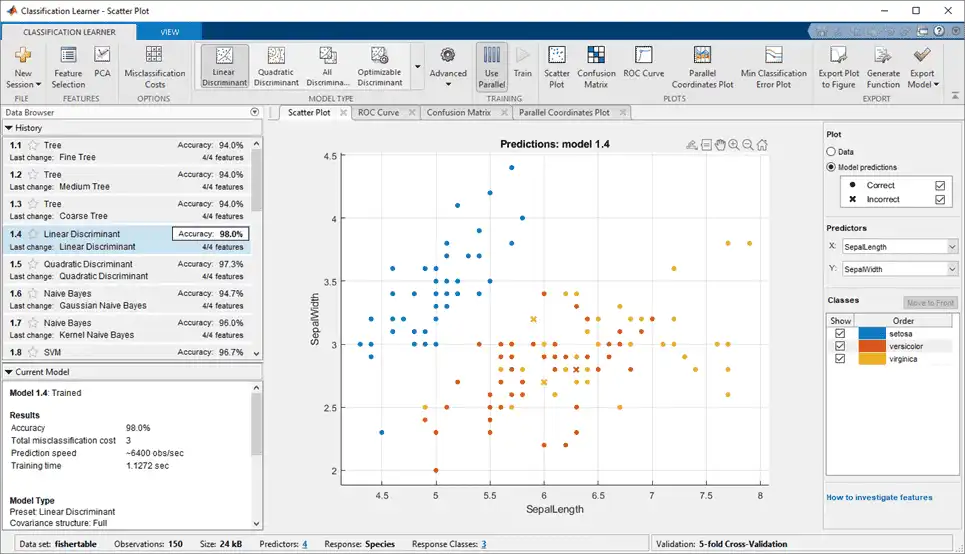

The app displays the results of the validated model. Diagnostic measures, such as model accuracy, and plots, such as a scatter plot or the confusion matrix chart, reflect the validated model results. You can automatically train a selection of classifiers or all of them, compare validation results, and choose the model that works best for your classification problem. When you choose a model to export to the workspace, Classification Learner exports the full model. Because Classification Learner creates a model object of the full model during training, you experience no lag time when you export the model. You can use the exported model to make predictions on new data.
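As a rough command-line illustration of the two parts, the following sketch trains a full model and a validated model of the same type. It assumes the `fisheriris` sample data set, a decision tree, 5 folds, and a 25% holdout fraction; all of these are choices made for the example, not settings taken from the app.

```matlab
% Fisher's iris data ships with Statistics and Machine Learning Toolbox.
load fisheriris            % meas: 150x4 predictors, species: 150x1 cell array of labels
rng(1)                     % fix the seed so the fold assignment is repeatable

% Full model: trained on all observations, analogous to the exported model.
fullModel = fitctree(meas, species);

% Validated model: 5-fold cross-validation of the same model type.
cvModel = crossval(fullModel, 'KFold', 5);
cvAccuracy = 1 - kfoldLoss(cvModel);          % fraction correct on held-out folds

% Alternative: holdout validation with 25% of the data held out.
holdoutModel = crossval(fullModel, 'Holdout', 0.25);
holdoutAccuracy = 1 - kfoldLoss(holdoutModel);

fprintf('5-fold accuracy: %.3f, holdout accuracy: %.3f\n', cvAccuracy, holdoutAccuracy);

% Use the full model, not the validated one, to predict on new data.
newFlower = [5.9 3.0 5.1 1.8];                % hypothetical new observation
predictedLabel = predict(fullModel, newFlower);
```

The validation accuracy estimates how the model type generalizes, while `fullModel` is the object you would keep for predictions.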

To get started by training a selection of model types, see Automated Classifier Training. If you already know what classifier type you want to train, see Manual Classifier Training.

Automated Classifier Training

You can use Classification Learner to automatically train a selection of different classification models on your data.

- Get started by automatically training multiple models at once. You can quickly try a selection of models, then explore promising models interactively.

- If you already know what classifier type you want, train individual classifiers instead. See Manual Classifier Training.

- On the Apps tab, in the Machine Learning group, click Classification Learner.

- Click New Session and select data from the workspace or from file. Specify a response variable and variables to use as predictors. See Select Data and Validation for Classification Problem.

-

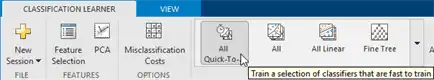

On the Classification Learner tab, in the Model Type section, click All Quick-To-Train. This option will train all the model presets available for your data set that are fast to fit.

- Click Train.

Note

The app trains models in parallel if you have Parallel Computing Toolbox™. See Parallel Classifier Training.

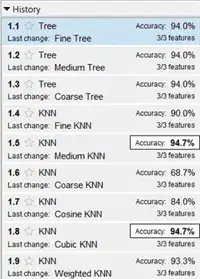

A selection of model types appears in the History list. When they finish training, the best percentage Accuracy score is highlighted in a box.

- Click models in the history list to explore results in the plots.

For next steps, see Manual Classifier Training or Compare and Improve Classification Models.

- To try all the nonoptimizable classifier model presets available for your data set, click All, then click Train.
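Outside the app, a similar "train several model types and compare" workflow might look like the sketch below. The list of model types, the `fisheriris` data, and the 5-fold partition are assumptions for illustration; the app's own presets may differ.

```matlab
load fisheriris
rng(1)

% Hypothetical selection of fast-to-fit model types.
names    = {'Tree', 'Discriminant', 'Naive Bayes', 'KNN'};
trainers = {@fitctree, @fitcdiscr, @fitcnb, @fitcknn};

% Use the same partition for every model so the scores are comparable.
cvp = cvpartition(species, 'KFold', 5);

accuracy = zeros(1, numel(trainers));
for k = 1:numel(trainers)
    cvModel = feval(trainers{k}, meas, species, 'CVPartition', cvp);
    accuracy(k) = 1 - kfoldLoss(cvModel);     % validation accuracy of model k
end

% Report every score and pick out the best one.
[bestAccuracy, bestIdx] = max(accuracy);
for k = 1:numel(names)
    fprintf('%-14s %.1f%%\n', names{k}, 100 * accuracy(k));
end
fprintf('Best: %s (%.1f%%)\n', names{bestIdx}, 100 * bestAccuracy);
```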

Manual Classifier Training

If you want to explore individual model types, or if you already know what classifier type you want, you can train classifiers one at a time, or train a group of classifiers of the same type.

-

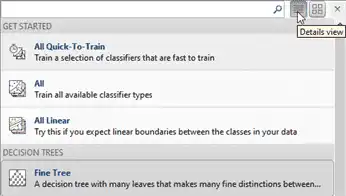

Choose a classifier. On the Classification Learner tab, in the Model Type section, click a classifier type. To see all available classifier options, click the arrow on the far right of the Model Type section to expand the list of classifiers. The nonoptimizable model options in the Model Type gallery are preset starting points with different settings, suitable for a range of different classification problems.

To read a description of each classifier, switch to the details view.

For more information on each option, see Choose Classifier Options.

- After selecting a classifier, click Train.

Repeat to try different classifiers.

Tip

Try decision trees and discriminants first. If the models are not accurate enough at predicting the response, try other classifiers with higher flexibility. To avoid overfitting, look for a model of lower flexibility that provides sufficient accuracy.

- If you want to try all nonoptimizable models of the same or different types, then select one of the All options in the Model Type gallery.

Alternatively, if you want to automatically tune hyperparameters of a specific model type, select the corresponding Optimizable model and perform hyperparameter optimization. For more information, see Hyperparameter Optimization in Classification Learner App.

For next steps, see Compare and Improve Classification Models.
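For readers who want a programmatic feel for automatic tuning, the sketch below runs a short hyperparameter optimization on a decision tree. It is not the app's implementation; the data set, the `'auto'` setting, and the option values (10 evaluations, no plots, no console output) are assumptions chosen to keep the example quick.

```matlab
load fisheriris
rng(1)

% Quiet, short optimization run; these option values are illustrative only.
optOptions = struct('MaxObjectiveEvaluations', 10, ...
                    'ShowPlots', false, ...
                    'Verbose', 0);

% Let fitctree search over its default ('auto') set of hyperparameters.
tunedTree = fitctree(meas, species, ...
    'OptimizeHyperparameters', 'auto', ...
    'HyperparameterOptimizationOptions', optOptions);

% Estimate accuracy of the tuned settings with a separate 5-fold cross-validation.
cvTuned = crossval(tunedTree, 'KFold', 5);
tunedAccuracy = 1 - kfoldLoss(cvTuned);
fprintf('Tuned tree 5-fold accuracy: %.3f\n', tunedAccuracy);
```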

Parallel Classifier Training

You can train models in parallel using Classification Learner if you have Parallel Computing Toolbox. When you train classifiers, the app automatically starts a parallel pool of workers, unless you turn off the default parallel preference Automatically create a parallel pool. If a pool is already open, the app uses it for training. Parallel training allows you to train multiple classifiers at once and continue working.

- The first time you click Train, you see a dialog while the app opens a parallel pool of workers. After the pool opens, you can train multiple classifiers at once.

- When classifiers are training in parallel, you see progress indicators on each training and queued model in the history list, and you can cancel individual models if you want. During training, you can examine results and plots from models, and initiate training of more classifiers.

To control parallel training, toggle the Use Parallel button in the app toolstrip. The Use Parallel button is only available if you have Parallel Computing Toolbox.

If you have Parallel Computing Toolbox, then parallel training is available in Classification Learner, and you do not need to set the UseParallel option of the statset function. If you turn off the parallel preference Automatically create a parallel pool, then the app will not start a pool for you without asking first.

Note

You cannot perform hyperparameter optimization in parallel. The app disables the Use Parallel button when you select an optimizable model. If you then select a nonoptimizable model, the button is off by default.
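At the command line, a loosely comparable way to train several nonoptimizable models at once is a `parfor` loop. This is only a sketch of the idea, not how the app schedules its work; the model list and data set are assumptions for the example.

```matlab
load fisheriris
rng(1)

% Hypothetical set of model types to train side by side.
trainers = {@fitctree, @fitcdiscr, @fitcnb, @fitcknn};
cvp      = cvpartition(species, 'KFold', 5);

accuracy = zeros(1, numel(trainers));

% With Parallel Computing Toolbox, parfor distributes iterations over a pool
% (starting one if the automatic-pool preference is on). Without the toolbox,
% the loop runs serially and gives the same kind of result.
parfor k = 1:numel(trainers)
    cvModel = feval(trainers{k}, meas, species, 'CVPartition', cvp);
    accuracy(k) = 1 - kfoldLoss(cvModel);
end

disp(accuracy)
```

Each iteration is independent of the others, which is what makes training separate models a natural fit for parallel execution.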

Compare and Improve Classification Models

- Click models in the history list to explore the results in the plots. Compare model performance by inspecting results in the scatter plot and confusion matrix. Examine the percentage accuracy reported in the history list for each model. See Assess Classifier Performance in Classification Learner.

- Select the best model in the history list and then try including and excluding different features in the model. Click Feature Selection.

Try the parallel coordinates plot to help you identify features to remove. See if you can improve the model by removing features with low predictive power. Specify predictors to include in the model, and train new models using the new options. Compare results among the models in the history list.

You can also try transforming features with PCA to reduce dimensionality.

See Feature Selection and Feature Transformation Using Classification Learner App.

- To improve the model further, you can try changing classifier parameter settings in the Advanced dialog box, and then train using the new options. To learn how to control model flexibility, see Choose Classifier Options. For information on how to tune model parameter settings automatically, see Hyperparameter Optimization in Classification Learner App.

- If feature selection, PCA, or new parameter settings improve your model, try training All model types with the new settings. See if another model type does better with the new settings.
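The feature selection and PCA steps above can be tried programmatically along these lines. The sketch assumes the `fisheriris` data, a discriminant classifier, the petal columns as the selected features, and a 95% explained-variance threshold; none of these come from the app. For brevity, PCA is computed once on all the data before validation, which is a simplification.

```matlab
load fisheriris
rng(1)
cvp = cvpartition(species, 'KFold', 5);

% Baseline: all four predictors.
accAll = 1 - kfoldLoss(fitcdiscr(meas, species, 'CVPartition', cvp));

% Feature selection: keep only the petal measurements (columns 3 and 4).
petalOnly = meas(:, [3 4]);
accPetal = 1 - kfoldLoss(fitcdiscr(petalOnly, species, 'CVPartition', cvp));

% PCA: keep enough components to explain at least 95% of the variance.
[~, score, ~, ~, explained] = pca(meas);
numComponents = find(cumsum(explained) >= 95, 1);
reduced = score(:, 1:numComponents);
accPCA = 1 - kfoldLoss(fitcdiscr(reduced, species, 'CVPartition', cvp));

fprintf('All: %.3f  Petal only: %.3f  PCA (%d comps): %.3f\n', ...
    accAll, accPetal, numComponents, accPCA);
```

Reusing one partition for all three runs keeps the comparison fair, because every variant is scored on the same folds.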

Tip

To avoid overfitting, look for a model of lower flexibility that provides sufficient accuracy. For example, look for simple models such as decision trees and discriminants that are fast and easy to interpret. If the models are not accurate enough at predicting the response, choose other classifiers with higher flexibility, such as ensembles. To learn about model flexibility, see Choose Classifier Options.
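One way to picture the flexibility trade-off is to vary a single flexibility setting and watch the validation accuracy. The sketch below does this for a decision tree's maximum number of splits. The split values and the one-percentage-point tolerance are arbitrary choices for this example.

```matlab
load fisheriris
rng(1)
cvp = cvpartition(species, 'KFold', 5);

% Increasing the maximum number of splits makes the tree more flexible.
maxSplits = [1 4 20 100];                 % illustrative values, least to most flexible
cvAccuracy = zeros(size(maxSplits));
for k = 1:numel(maxSplits)
    cvTree = fitctree(meas, species, ...
        'MaxNumSplits', maxSplits(k), 'CVPartition', cvp);
    cvAccuracy(k) = 1 - kfoldLoss(cvTree);
end

% Prefer the least flexible tree whose accuracy is within the tolerance of the best.
tolerance = 0.01;
simplestIdx = find(cvAccuracy >= max(cvAccuracy) - tolerance, 1);
fprintf('Chosen MaxNumSplits: %d (accuracy %.3f)\n', ...
    maxSplits(simplestIdx), cvAccuracy(simplestIdx));
```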

The figure shows the app with a history list containing various classifier types.